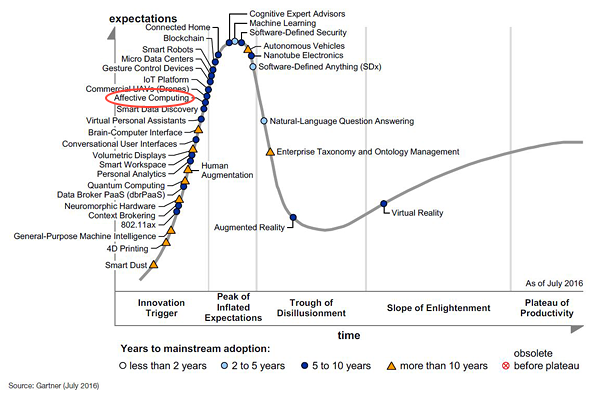

We are witnessing a disruptive era. Advances in technology and computing have been game changers over the past decades. Computers can now talk with you, understand what you tell them and give coherent answers. They can order taxis and food for you (Amazon’s Alexa), a device can track your tempo while you run and play music that matches it at that precise moment (Spotify Running), and you can even scan your bank card with your phone and pay by holding the phone against the card machine (Apple Pay). Computers are now starting to gain the capability of expressing and recognizing affect and emotions, and may soon acquire the ability of “having emotions”. This is known as Affective Computing. On the Gartner Hype Cycle, affective computing sits in the Innovation Trigger phase and is categorized as 5 to 10 years away from mainstream adoption.

Gartner Hype Cycle


But what does the unfamiliar term affective computing actually mean? Affective computing is defined as the analysis and development of systems and devices that can interpret, understand, recognize and simulate human emotions, facilitating empathy in human-computer interaction. It is purely about human-computer interaction: a device acquires the ability to detect the user’s emotions and other stimuli and to respond to them adequately, gathering cues to the user’s emotion from a variety of sources: facial expressions, body gestures and postures, vocal nuances, hand movement, speech, the force and rhythm of keystrokes, body temperature and other physiological signals. All these sources can signal changes in the user’s emotional state that computers can understand and interpret as, for instance, frustration, curiosity, anger, joy, grief or fear. To this end, affective computing tries to give computers the human-like abilities of observation, interpretation, appreciation and generation of affect features. Taking into account the role of emotion in both human perception and cognition will not only help computers perform better when assisting humans, it will also allow them to make decisions based on data and, fundamentally, on the emotions perceived. Given that scientific principles derive from rational thought, logical arguments, testable hypotheses and repeatable experiments, emotions have never been well recognized in science and have been considered intrinsically non-scientific. Nonetheless, with the ongoing development of emotional intelligence applied to devices and computers, emotions are starting to play a major role and are leaving behind the marginalized state they were in years ago.

Affective computing encompasses two main areas: the detection and recognition of emotional information, and emotions in machines. The detection of emotional information begins with passive sensors: cameras for facial expressions, body gestures and posture, microphones for speech, and other sensors that measure emotional cues such as skin temperature. To recognize emotional information, the common practice is to extract meaningful patterns from the data using machine learning techniques such as speech recognition and facial expression detection, among others. The other area, emotions in machines, consists of designing computational devices that either exhibit innate emotional capabilities or are capable of convincingly simulating emotions.

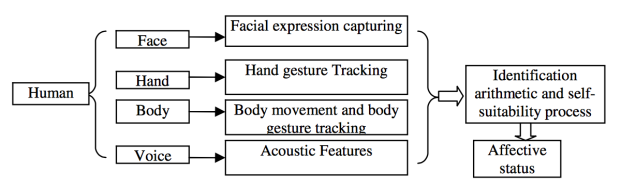

All in all, the procedure of affective computing, and the affective interaction that it entails, consists of capturing and modelling affect information, understanding affect and expressing it.

People tend to show affect through facial expressions, body gestures and movements, voice, heart rate, temperature and the other signals mentioned above. Affective computing analyzes these signals, compiling data with different algorithms, machine learning and other technologies. Emotional speech processing, by speech synthesis; facial expression analysis, done with audio-visual mapping; and body gesture and movement analysis, done with apparentness methods and 3D modeling methods, are examples of how human affect can be interpreted.

How would your computer interact with you when you look frustrated? How is the computer or device going to know that you are frustrated or upset at that precise moment? Could your phone know when you feel anger or grief after a call? To understand how this is going to be possible, we should first understand the technical facet of affective computing:

- Affective computing sensing and analysis relies on a series of algorithms and features for detecting and recognizing the emotional state from face and body gestures. Two main algorithms are the linear discriminant classifier (LDC), where classification is based on the value obtained from a linear combination of the feature values, which are provided as feature vectors; and k-nearest neighbours (k-NN), where an object is classified by locating it in the feature space and comparing it with its k nearest neighbours (the training examples).

- Emotion recognition through analysis of text and spoken language.

- Analysis of emotional speech processing from prosody and voice quality.

- Recognition of affective state from central and peripheral physiological measures (e.g. galvanic skin response)

- Multi-modal recognition of affective state

- Computational models of human emotion processes (e.g. decision-making models influenced by emotions, predictive models of user state)

- Emotional profiling

The figure above shows a simplification of how to achieve the affective status.
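
The two classifiers named in the list above, LDC and k-NN, can be illustrated with a small sketch. The Python example below is only an illustration under stated assumptions: the two features (mouth-corner lift and brow lowering), the toy training values and all function names are hypothetical, and the linear discriminant is simplified by assuming both classes share the same spherical covariance, so its weights reduce to the difference between the class means.

```python
import math
from collections import Counter

# Toy training set of (feature_vector, label) pairs.
# Hypothetical features: (mouth-corner lift, brow lowering), scaled to 0..1.
TRAINING = [
    ((0.9, 0.1), "joy"),
    ((0.8, 0.2), "joy"),
    ((0.7, 0.1), "joy"),
    ((0.1, 0.9), "anger"),
    ((0.2, 0.8), "anger"),
    ((0.3, 0.9), "anger"),
]


def knn_classify(x, training, k=3):
    """k-NN: label x by majority vote among its k nearest training examples."""
    by_distance = sorted(training, key=lambda sample: math.dist(x, sample[0]))
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]


def class_mean(training, label):
    """Component-wise mean of all feature vectors carrying the given label."""
    rows = [features for features, lab in training if lab == label]
    return [sum(column) / len(rows) for column in zip(*rows)]


def fit_ldc(training, positive, negative):
    """Fit a two-class linear discriminant and return (weights, bias).

    Assumption: shared spherical covariance, so the weights are simply
    the difference of the class means and the boundary lies midway.
    """
    mu_pos = class_mean(training, positive)
    mu_neg = class_mean(training, negative)
    weights = [p - n for p, n in zip(mu_pos, mu_neg)]
    bias = -0.5 * (sum(p * p for p in mu_pos) - sum(n * n for n in mu_neg))
    return weights, bias


def ldc_classify(x, weights, bias, positive, negative):
    """LDC: decide from the sign of a linear combination of the features."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return positive if score > 0 else negative


if __name__ == "__main__":
    weights, bias = fit_ldc(TRAINING, "joy", "anger")
    sample = (0.85, 0.15)  # a new, unlabeled observation
    print(knn_classify(sample, TRAINING))
    print(ldc_classify(sample, weights, bias, "joy", "anger"))
```

A real system would work with many more features, extracted from camera, microphone or physiological sensors, and would normally rely on an established machine learning library rather than hand-written classifiers.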


Game Changer

As said before, science has always been about facts, rational and logical argumentation, testable hypotheses and repeatable experiments. Emotions have never played a major role in science or in any other facet of technology, which have always been about processes, algorithms and mathematical models. But why? If emotions are a fundamental part of human experience, why marginalize such a major aspect of life? The answer is simple: emotions seemed too complex to quantify, and no technology existed that could measure and read them. As shown above, this is not something a two-year-old could do, and in most cases not even a scientist. This is why, in an era where science and scientific facts are all that matters, Affective Computing is changing the rules of the game. The inclusion of emotions in science will open a whole new perspective full of possibilities, giving science and technology a human perspective. For instance, an e-learning device could detect when the learner is having difficulty understanding the concept being explained, stop automatically at that point, and repeat the explanation as many times as needed or even give a deeper one. Likewise, e-therapy could help provide psychological health services online and be as successful as in-person counseling. Affective computing is going to be a game changer not only for science in general but also for specific existing and emergent technologies. Social agents, robotics and machine learning are examples of technologies that could improve by taking advantage of affective computing.

Affective computing could also have an impact on society itself. Apart from the great impact on science and technology, society can take advantage of it directly. How? Assuming it could overcome the common privacy and security issues, this disruptive technology could make public spaces safer by helping security forces determine when someone has malicious intentions. Corporations could also see a significant impact if they took advantage of the technology to learn from their employees, for example by determining whether employees feel comfortable with the work they are doing, or whether they are in the right state of mind to do the work they are assigned.

Barriers to Disrupt

Even though affective computing is having an increasing impact on technology, society and corporations, everything new and emergent comes with a series of barriers. As always, the main issue is the ethical and privacy aspect. Some people may see the technology as intrusive. If privacy and data appropriation were already a top concern for a large segment of society, how are people going to accept that devices will now be able to know how we feel? Even if the main purpose of a device knowing our emotions is to improve the customer experience, some people are not keen to accept that the devices they use are going to know how they feel. Another ethical issue is that the technology can infringe on autonomy when it is applied to the customer journey at a supermarket or fashion shop, for instance. By analyzing how you feel and adapting the customer experience to your emotions, the service might avoid offering some of the available services or products, which means that, because of affective computing, the customer is misinformed about the choices and opportunities they have. One way to get the ethics right is to respect the client’s autonomy. It might seem a bit far-fetched for today’s technology, but the service could simply suggest how the journey could be better according to the customer’s emotions without misinforming them about the alternatives, maybe by offering different options or by showing everything that is available on the market. The customer experience would then be improved while remaining correct with respect to current ethical concerns. Despite people’s opinion on how intrusive the technology is to their privacy, the reality is that it will decrease the intrusiveness of human-machine interface technology and make it better suited to people, thanks to its more natural interactions and its seamless presence in the environment.

An additional barrier to the adoption of this technology is the lack of information given to people. This is a big problem for any emerging technology: we can all boast of being early adopters, but who is truly keen to adopt an up-and-coming technology like affective computing without being well informed and, of course, without having seen it implemented before? Not many. The lack of information given to ordinary people (not scientists and engineers) and the meager number of sources (compared to other emergent and disruptive technologies like the Internet of Things) make adoption difficult. Anybody can settle into the comfort of not implementing something they do not know to be actually prodigious. But, again, this barrier will be overcome with time. Time equals progress, and these days disruptive technologies are progressing faster than time itself. The longer this technology exists and improves, the more research will be done and the more informed people will be, leaving behind the comfort of not seeking change that people live in when uninformed.

Apart from the ethical and lack-of-information barriers, one important and, for the time being, unknown barrier to adoption is the cost that implementation could entail in the different industries. How much would it cost to implement affective technology in a device or machine? What about implementing it in wearable technologies? This is yet to be determined.

Industries

Generally, emergent technologies as disruptive as this one are adopted by well-established companies or sectors that always look to innovate and keep up to date with up-and-coming technologies. When considering which companies or industries should, and probably would, integrate the technology into their products, one that commonly comes to mind is the automotive industry.

The automotive industry is always reinventing itself. Who would have thought of a car driving itself if the driver unintentionally fell asleep? Or a car parking without the driver touching the steering wheel? Or, even better, a car advising on speed based on the road?

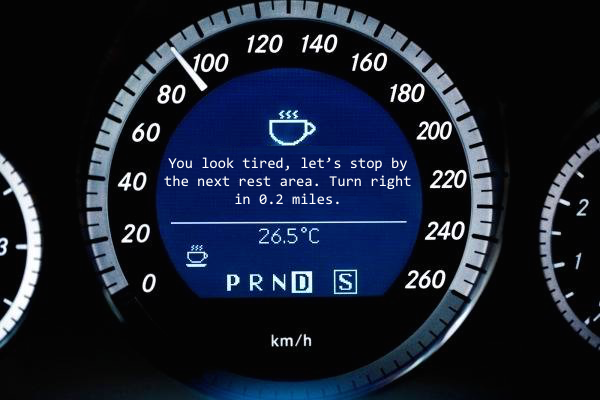

This is what the automotive industry has been up to in recent years. When an impactful technology such as this one is well defined and fully functional, the automotive industry will be one of the pioneers in adopting it, especially the companies that are more technology-oriented, such as Tesla. Tesla defines itself as an innovative company selling the perfect car: technologically innovative and environmentally friendly. The way Tesla presents itself gives an idea of why it would want to implement affective computing. Furthermore, Tesla knows that the type of car it sells is a completely innovative facet of the industry, so it maximizes what every customer looks for in a car: exclusivity, comfort and, above all, safety. Innovative technology is its main objective, and affective technology could let a Tesla car know whether the driver needs anything, such as food or something to drink, by perceiving that the driver is hungry or thirsty, and recommend where to stop to eat or drink without the driver having to ask. Beyond that, Tesla could use affective computing to improve safety. By analyzing the driver’s body language, movements, gestures and sounds, or even the way the driver is controlling the car, affective computing implemented in Tesla cars could give the driver recommendations. For instance, if the car’s affective computing system analyzes the driver and detects signs of fatigue, the car could display a message on the windscreen or talk to the driver directly, recommending a stop at the next rest area.

Car’s dashboard displaying a message when it recognizes that the driver is tired.

Even though Tesla will presumably be the pioneer in adopting the technology, we can consider the members of the automotive industry to be “fashion victims”: once the pioneer adopts the technology, starts testing it and it seems to work, the main car companies like Mercedes-Benz, BMW and Audi will probably adopt it too, and even try to improve it or find a better application for it than the pioneer’s. This is nothing new; it has been seen throughout history since the early days of the car. Competitors will not only come from the automotive industry. Multinational technology companies will also become competitors when it comes to adopting the technology. This refers to companies like IBM, Microsoft or Intel, which in past years have been leading the development of new technologies. Such an enterprise could compete with the most innovative company in the automotive industry by supplying the technology to that company’s competitors in the same sector.

Enterprises will have to develop a series of competences in order to implement affective technology in their processes. Technology-innovative companies like Tesla will have fewer problems with this, but companies that are not technology-driven will have to acquire a whole new innovative approach throughout the company, including: creativity, which means generating ideas, critical thinking and creative problem solving; enterprising, which involves seeking improvement, being technology savvy and thinking independently; integrating perspectives, which covers engaging and collaborating and openness to ideas; forecasting, which includes having vision, perceiving systems and managing the future; and lastly managing change, which includes intelligent risk taking, reinforcing change and sensitivity to situations.

A common mistake when trying to innovate is to innovate in only one process. A company trying to innovate should reinvent all its antiquated processes.

Disruption

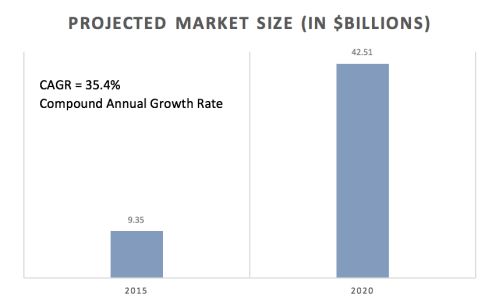

There is no doubt that affective computing is an emergent technology with a fast pace of development and growth. The bar graph of its projected market size shows that the disruption caused by this technology will be noticeable over the coming years.

Comparison between the current market size of Affective Computing and the projected market size in 2020

First of all, what is disruptive innovation? Clayton Christensen describes disruptive innovation in his book The Innovator’s Dilemma as “a process by which a product or service takes root initially in simple applications at the bottom of a market and then relentlessly moves up market, eventually displacing established competitors”. This definition places Affective Computing as a disruptive technology.

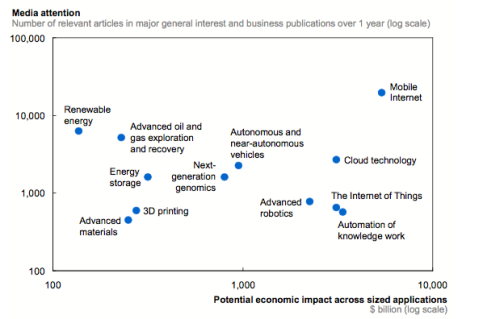

The robotics sector could be the one disrupted by Affective Computing. Today, robots can serve you in different ways: they can automate work to make it more efficient, work as secretaries that set appointments in your calendar, or even work as tour guides that suggest places to visit or where to find the nearest Italian restaurant. But to date, no robot, not even the well-known home-robot Alexa, has been able to understand how you really feel without you saying so. Robotic devices can automate work to make it more efficient, but no robot can perform a job in a humanized manner. That said, emotional intelligence applied to robots (Affective Computing) will be a must-have for any robot developed, disrupting the advanced robotics industry. The disruption of this sector would not go unnoticed: the sector is large, and a McKinsey & Company study considers its potential economic impact across sized applications to be one of the leading among emergent technologies.

Relation between the general interest and the economic impact across sized applications of leading emergent technologies


By affecting the advanced robotics industry, affective computing will create a “domino effect” on other industries where advanced robotics is implemented, like healthcare or the multinational technology companies that are starting to offer robots as products or services. The healthcare industry will be especially affected by the disruption of advanced robotics; adopting Affective Computing in surgical robots or robotic prosthetics will be a major issue to solve. After all, what hospital would not like a robot that could perform surgery with the same feelings and emotions as a top-performing surgeon?

All things considered, Affective Computing is a technology with the potential to dramatically change the status quo, transforming how people live and work, creating new opportunities and driving growth and comparative advantage for nations.

Please, feel free to help support further research of Affective Computing by completing the following survey: Affective Computing Research Survey.

References

Christensen, C. (2012) Disruptive innovation. Available at: http://www.claytonchristensen.com/key-concepts/ (Accessed: 28 February 2017).

Gartner (2016) Hype cycle research methodology. Available at: http://www.gartner.com/technology/research/methodologies/hype-cycle.jsp (Accessed: 28 February 2017).

Hoven, den, Jeroen, Blaauw, M., Pieters, W. and Warnier, M. (2014) Privacy and information technology. Available at: https://plato.stanford.edu/entries/it-privacy/ (Accessed: 28 February 2017).

Marr, B. (2016) What is Affective computing and how could emotional machines change our lives? Available at: https://www.forbes.com/sites/bernardmarr/2016/05/13/what-is-affective-computing-and-how-could-emotional-machines-change-our-lives/#4a5f4961e580 (Accessed: 28 February 2017).

Murgia, M. (2016) Affective computing: How ‘emotional machines’ are about to take over our lives. Available at: http://www.telegraph.co.uk/technology/2016/01/21/affective-computing-how-emotional-machines-are-about-to-take-ove/ (Accessed: 28 February 2017).

Research & Markets (2016) Affective computing market 2015 – technology, software, hardware, vertical, & regional forecasts to 2020 for the $42 Billion industry. Available at: http://www.prnewswire.com/news-releases/affective-computing-market-2015—technology-software-hardware-vertical–regional-forecasts-to-2020-for-the-42-billion-industry-300144024.html (Accessed: 28 February 2017).

Tam, R. (2016) McKinsey global institute: Disruptive technologies: Advances that will transform life, business, and the global economy. Available at: http://www.mckinsey.com/~/media/McKinsey/Business%20Functions/McKinsey%20Digital/Our%20Insights/Disruptive%20technologies/MGI_Disruptive_technologies_Full_report_May2013.ashx (Accessed: 28 February 2017).

Tao, J. and Tan, T. (2011) ‘Affective computing: A review’, in Affective Computing and Intelligent Interaction. Springer Nature, pp. 981–995.

Tesla Motors (2017) Tesla UK. Available at: https://www.tesla.com/en_GB/?redirect=no (Accessed: 28 February 2017).

University of Cambridge (2015) Computer laboratory: Emotionally intelligent interfaces. Available at: http://www.cl.cam.ac.uk/research/rainbow/emotions/ (Accessed: 28 February 2017).

Väyrynen, Röning and Alakärppä (2009) Human Technology: An Interdisciplinary Journal on Humans in ICT Environments, 2(1). doi: 10.17011/ht/urn.201506212392.

Great analysis of the impacts of affective computing! I especially love how you explained the “domino effect” so well. It is indeed ironic how when it comes to robots that are on the surface incredibly mechanical and lacking of emotions, the robotics industry would experience the biggest impact of affective computing. And subsequently, this prior impact on the robotics industry would be propagated for other industries utilising robots. I think affective computing is both very fascinating and dangerously intrusive when it comes to personal data, especially when wearable equipment is collecting information on our physiological signals. Companies waiting to adopt this technology would surely have to prepare for tons of legal issues in future.


Alexander Escobar

A great explanation about What Affective Computing is. This post really helps me to understand many variables about how this emerging technology can affect the worldwide. It is really curious how all things considered can change “the status quo” and generate opportunities and challenges in the economy.


Wow! I found really interesting this topic. This is an emergent technology of which society is not very aware. Personally, I have heard about it not more than three times. Great clarification of this with the graph showing the big economic impact Affective Computing has and the small society impact it has up to date. In my opinion, people should be aware about it and about the consequences it can entail.

Good job! I really enjoyed it.


Good post. It is surprising how the economic impact it has can pass unnoticed; an expected compound annual growth rate of 35% from now until 2020 is something not every technology can boast of. Notwithstanding the fact that it is a projected growth, who knows if it is actually going to grow more… All in all, affective computing is an emergent technology that is going to bring surprises along with its exponential growth pace. Descriptive and well-informed blog post.


Great read! Personally, I don’t feel comfortable having systems or devices take advantage of my emotions for marketing purposes. As Thingification (Internet of Things) begins to rise, appliances, cars and smartphones will be connected and can relay messages; even our emotions will be linked to one another. When you’re upset about the cold temperature, for instance, affective computing analyzes your facial expression or even the goosebumps on your skin, and the heater may automatically turn on. I find that fascinatingly scary.

Also, as you covered, the idea that computers could potentially have human emotions scares me; however, such technology is still quite far in the future. I think current technology, systems, robots, devices or computers do not really “understand” or “empathize,” as you have mentioned. I agree that in an abstract sense they do “understand” data; however, they are just processing the data they are programmed to process, with no human-like emotions or understanding. When talking about AI, experts often debate over the word “understand,” but it is possible for future technology to have emotions.
